December 14, 2015

Virtual Earth Observing: Simulating the Globe in 1-Mile Segments

NASA Goddard Space Flight Center (GSFC) scientists recently completed the highest-resolution global weather simulation ever run in the U.S. Using the Goddard Earth Observing System (GEOS-5) model, the simulation carved the atmosphere into 200 million segments, each just 1 mile (1.5 kilometers) wide. This trailblazing computation ran on the Discover supercomputer at the NASA Center for Climate Simulation (NCCS).
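As a rough sanity check on the 200 million figure, you can divide Earth's surface area by the area of a 1-mile-square cell. The sketch below assumes a perfectly spherical Earth with a mean radius of 6,371 km and uniform square cells. The article does not describe GEOS-5's actual grid geometry, so `column_count` and its constants are illustrative only.

```python
import math

EARTH_RADIUS_KM = 6371.0   # assumed mean Earth radius (spherical approximation)
MILE_KM = 1.609344         # one statute mile in kilometers


def column_count(cell_width_km: float, radius_km: float = EARTH_RADIUS_KM) -> float:
    """Approximate number of square columns of the given width
    needed to tile the surface of a sphere of the given radius."""
    surface_area_km2 = 4.0 * math.pi * radius_km ** 2
    return surface_area_km2 / cell_width_km ** 2


cells = column_count(MILE_KM)
print(f"~{cells / 1e6:.0f} million columns")
```

With 1-mile cells this prints about 197 million, consistent with the article's 200 million. Using an exact 1.5-km spacing instead gives roughly 227 million, because a mile is slightly longer than 1.5 km.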

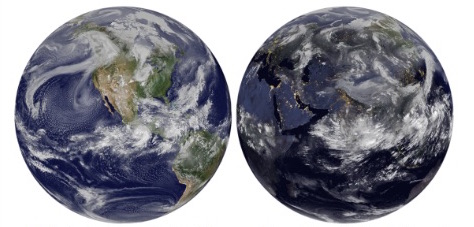

outgoing longwave radiation (right) from a 1-mile/1.5-kilometer (km) Goddard Earth Observing System (GEOS-5) global atmospheric model simulation for June 16, 2012. Visualizations by William Putman, NASA GSFC.

"At 1-mile resolution we can see weather and other atmospheric phenomena at neighborhood scale," said William Putman, model development lead at GSFC’s Global Modeling and Assimilation Office (GMAO). "That is also the resolution of most modern satellite observations. By creating a virtual Earth observing system, our simulation connects neighborhoods around the world to regional and global events influencing local weather and climate."

The GEOS-5 simulation covers June 15–18, 2012. Putman chose that period because of severe weather outbreaks across the U.S. Indeed, the simulation spawns large thunderstorm complexes with overshooting cloud tops that indicate tornado-producing storms. Another standout is a sea of intricate stratocumulus clouds blanketing the oceans.

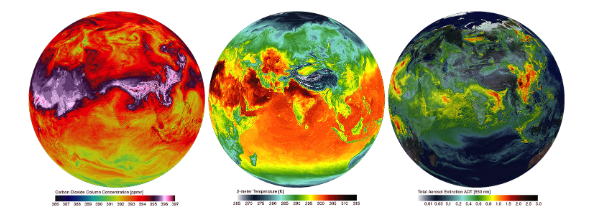

Beyond weather, this is the first ultra-high-resolution global simulation to include interactive aerosols and carbon. In often-mesmerizing visualizations, "fine aerosol particles stream from industrial plants and fires around the globe, and waves of carbon dioxide drift from their sources and engulf the world," Putman said.

NASA Center for Climate Simulation’s Discover supercomputer. Photo by Bill Hrybyk, NASA GSFC.

Hosting a simulation of this magnitude and complexity fell to the tenth and biggest scalable unit (SCU10) of the NCCS Discover supercomputer, an SGI Rackable cluster with 30,240 processor cores. SCU10 has a peak speed of 1.2 petaflops, or 1.2 quadrillion (thousand trillion) floating-point operations per second.

"This is our first SCU capable of performing a petaflop by itself," said Dan Duffy, NCCS high-performance computing lead. "Months before installation we started planning with the GMAO how we could push GEOS-5 to the highest resolution possible."

SCU10’s 30,240 cores are organized into 1,080 nodes, each containing two 14-core Intel Haswell processors. GMAO scientists and software engineers trimmed GEOS-5’s memory footprint to fit more than 5 terabytes of global data into the 128 gigabytes of available memory per node. The 4-day simulation produced nearly 15 terabytes of output each day, for a total of nearly 60 terabytes.
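The hardware figures quoted for SCU10 are internally consistent, and a few lines of arithmetic make that easy to check. This sketch uses only numbers stated in the article. The aggregate-memory and per-core peak values are derived here, not quoted, and terabytes are taken as decimal (1,000 GB).

```python
NODES = 1_080
SOCKETS_PER_NODE = 2         # two Haswell processors per node
CORES_PER_SOCKET = 14
MEM_PER_NODE_GB = 128
PEAK_FLOPS = 1.2e15          # SCU10 peak: 1.2 petaflops
DAYS_SIMULATED = 4
OUTPUT_TB_PER_DAY = 15       # "nearly 15 terabytes" per simulated day

cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
aggregate_mem_tb = NODES * MEM_PER_NODE_GB / 1000    # decimal terabytes
peak_per_core_gflops = PEAK_FLOPS / cores / 1e9
total_output_tb = DAYS_SIMULATED * OUTPUT_TB_PER_DAY

print(cores, aggregate_mem_tb, round(peak_per_core_gflops, 1), total_output_tb)
```

This prints `30240 138.24 39.7 60`. The core count matches the 30,240 quoted above, the cluster holds about 138 TB of memory in aggregate, and each core contributes roughly 40 gigaflops of peak speed.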

As Putman noted, the only other global weather simulation at this scale ran on Japan’s 10.5-petaflops K computer, which cost $1.25 billion to develop and build. That simulation used the Nonhydrostatic ICosahedral Atmospheric Model (NICAM) at 0.87-km resolution. By comparison, NASA completed its 1-mile/1.5-km simulation on a cluster costing under $10 million.
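To put the comparison in numbers, the sketch below treats the number of horizontal columns as scaling with the inverse square of grid spacing. That is a simplifying assumption that ignores differences between NICAM's icosahedral grid and GEOS-5's grid. NICAM's spacing is taken as 0.87 km. Because NASA's cost is an upper bound ("under $10 million"), the cost ratio is a lower bound.

```python
nasa_dx_km, nicam_dx_km = 1.5, 0.87
nasa_cost_usd, k_cost_usd = 10e6, 1.25e9   # NASA figure is an upper bound
scu10_peak_pf, k_peak_pf = 1.2, 10.5

# Halving the grid spacing quadruples the horizontal column count,
# so the ratio of column counts is the square of the spacing ratio.
column_ratio = (nasa_dx_km / nicam_dx_km) ** 2
cost_ratio = k_cost_usd / nasa_cost_usd     # at least this large
peak_ratio = k_peak_pf / scu10_peak_pf

print(f"{column_ratio:.2f} {cost_ratio:.0f} {peak_ratio:.2f}")
```

This prints `2.97 125 8.75`. Under these assumptions, the NICAM run had roughly three times as many horizontal columns, on a machine with 8.75 times SCU10's peak speed and at least 125 times its cost.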

The 1-mile/1.5-km simulation is the newest example of the GMAO continually exploring the limits of computational capacity with GEOS-5. The NCCS partners in this endeavor by granting pioneer access to newly installed Discover SCUs. The GMAO-NCCS collaboration not only promotes pioneering simulations but also identifies hardware and software issues before releasing the SCUs to the user community.

surface temperature (center), and fine particles (right) from a 1-mile/1.5-km GEOS-5 model simulation for June 16, 2012. Visualizations by William Putman, NASA GSFC.

In 2009, Putman ran a 3.5-km global simulation on Discover using 4,128 Intel Harpertown cores. It was the first global cloud-permitting simulation with GEOS-5 and the highest-resolution run of any global model at that time. With this leap in resolution, the model could simulate only 1 day per wall-clock day, continuing a tradition begun with the first numerical weather simulation in 1950.

Over the next several years, growing numbers of new-generation processors and software innovations enabled greater model throughput. In 2010, a 5-km simulation running on 3,750 Intel Nehalem cores achieved 5 simulated days per wall-clock day. By 2013, the 2-year, 7-km Nature Run simulation leveraged 7,680 Intel Sandy Bridge cores to yield 12 days per day.
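Throughput in "days per day" is easier to compare when it is converted to wall-clock time. The sketch below does that for the runs described above. The helper names are hypothetical, and the 2-year Nature Run is approximated as 730 simulated days.

```python
def wall_hours_per_sim_day(days_per_day: float) -> float:
    """Wall-clock hours needed to advance the model by one simulated day."""
    return 24.0 / days_per_day


def wall_days_for(sim_days: float, days_per_day: float) -> float:
    """Wall-clock days needed to simulate `sim_days` at a given throughput."""
    return sim_days / days_per_day


# (run, grid spacing in km, cores, simulated days per wall-clock day)
RUNS = [
    ("2009", 3.5, 4_128, 1),
    ("2010", 5.0, 3_750, 5),
    ("2013 Nature Run", 7.0, 7_680, 12),
]

for name, dx_km, cores, rate in RUNS:
    hours = wall_hours_per_sim_day(rate)
    print(f"{name}: {dx_km} km on {cores} cores -> {hours:.1f} h per simulated day")

# Approximate wall-clock duration of a 2-year (assumed 730-day) run at 12 days per day
print(round(wall_days_for(730, 12), 1))
```

The three runs need 24, 4.8, and 2 wall-clock hours per simulated day, respectively. Under the 730-day assumption, the Nature Run would have needed about two months of wall-clock time (about 60.8 days).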

With more than 20 times the Nature Run’s resolution, the 1.5-km simulation of 2015 resumed the 1-day-per-day tradition. Such throughput is impractical for weather forecasting, where models must finish 10-day simulations every 6 hours. Adding data assimilation to incorporate satellite and other observations demands yet more computing power.
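Two numbers in this paragraph can be made concrete. First, going from 7-km to 1.5-km spacing shrinks the grid spacing by a factor of about 4.7, so the "more than 20 times" figure appears to refer to the number of horizontal grid columns, which scales with the square of that factor. This interpretation is an assumption, not something the article states. Second, finishing a 10-day forecast every 6 hours implies a required throughput in simulated days per wall-clock day. The sketch ignores the extra cost of data assimilation.

```python
NATURE_RUN_DX_KM = 7.0
NEW_DX_KM = 1.5

# Horizontal column count scales with (old spacing / new spacing) squared.
column_ratio = (NATURE_RUN_DX_KM / NEW_DX_KM) ** 2

# Operational requirement: a 10-day forecast completed every 6 hours.
FORECAST_DAYS = 10
CYCLE_HOURS = 6
required_days_per_day = FORECAST_DAYS * (24 / CYCLE_HOURS)

ACHIEVED_DAYS_PER_DAY = 1    # throughput of the 1.5-km run, per the text
shortfall = required_days_per_day / ACHIEVED_DAYS_PER_DAY

print(f"{column_ratio:.1f}x columns, need {required_days_per_day:.0f} days/day, "
      f"{shortfall:.0f}x short")
```

This prints `21.8x columns, need 40 days/day, 40x short`. Forecasting at 1.5 km would therefore need roughly 40 times the throughput this run achieved, before any data assimilation is added.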

Accordingly, the world’s leading operational weather centers currently use model resolutions in the 12- to 16-km range. The GMAO will soon run its operational forecasts at 12.5-km resolution across 7,000 cores on Discover. Because 1.5-km forecasts would require a staggering 10 million cores, they are not expected to become routine until 2030. "We are excited to get a preview of forecasting capability 15 years in advance," Putman said.

Jarrett Cohen

NASA Goddard Space Flight Center

Contacts

William Putman, Model Development Lead, Global Modeling and Assimilation Office, NASA Goddard Space Flight Center, william.m.putman@nasa.gov, 301.286.2599

Dan Duffy, High-Performance Computing Lead, NASA Center for Climate Simulation, NASA Goddard Space Flight Center, daniel.q.duffy@nasa.gov, 301.286.8830

More Information

Virtual Earth Observing: The Globe in 1-Mile Segments

Global Modeling and Assimilation Office