Accelerating Science with AI and Machine Learning

Overview

Using the NASA Center for Climate Simulation (NCCS) Advanced Data Analytics Platform (ADAPT), NASA researchers are accelerating scientific research with artificial intelligence (AI) and machine learning (ML).

Background

NASA data holdings are growing at a geometric rate, including an estimated 100 petabytes of Earth science imagery alone. Traditional analysis methods cannot produce answers from these data in a reasonable time frame. Scientists have therefore turned to artificial intelligence and machine learning (AI/ML) methods to help analyze the massive volumes of data.

Focus on the Scientist

NASA senior scientist Mark Carroll — from NASA Goddard Space Flight Center’s Computational and Information Sciences and Technology Office (CISTO) — is leading the Data Science Group (DSG) and working with scientists and industry to take advantage of all available resources to accelerate science, including AI/ML methods. Over the past 10 years, the DSG has built data analytic services for climate reanalysis data, including the MERRA Analytic Service, Earth Data Analytics Service, and CREATE-V.

Projects

Dr. Carroll and the DSG manage several projects that use various AI/ML methods, along with high-performance computing (HPC), to derive products and inferences from image products, including satellite imagery and model outputs. The DSG is developing a unified approach to data science that incorporates AI/ML. The group's goal is to generate a software codebase that uses ML techniques, is optimized for HPC systems, and is reusable across many different projects.

Currently, the DSG is working with scientists to accelerate a variety of projects. Examples include supporting landslide detection with commercial, high-resolution data; automatic detection of lake depth from Landsat data; routine mapping of surface water extent for reservoir management; and using maximum entropy modeling to predict habitat suitability for particular species.

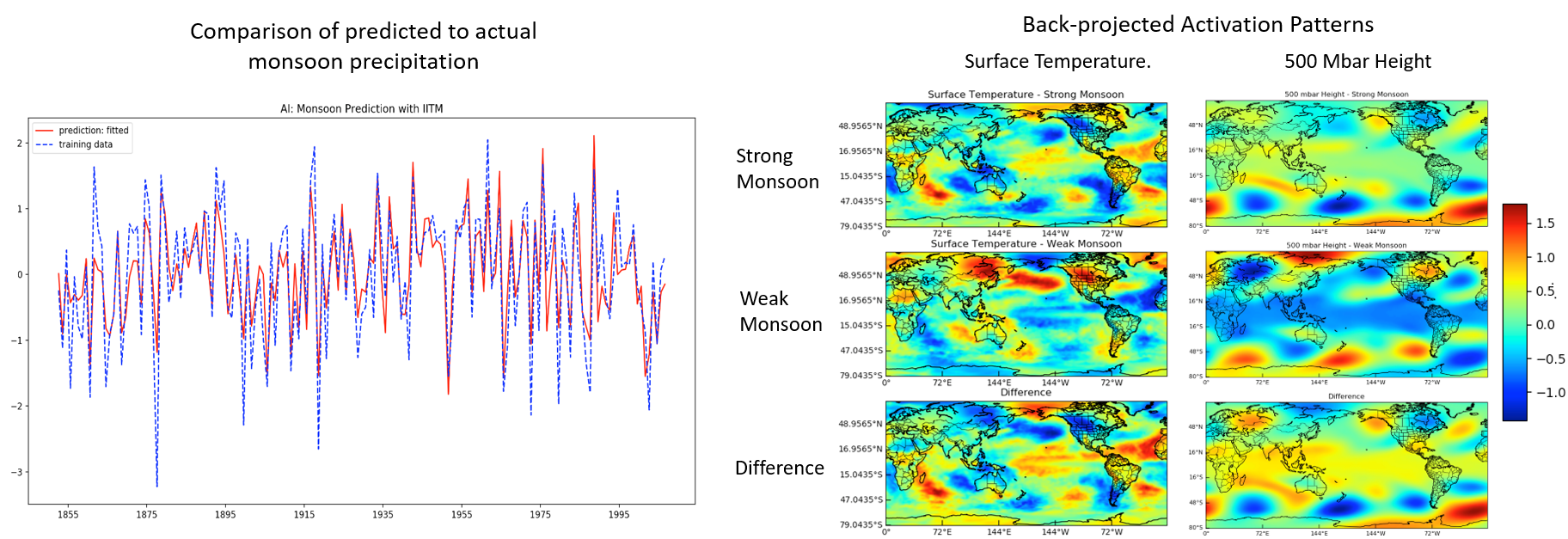

Figure 1 - The goal of this research is to explore teleconnections between features in climate data using machine learning techniques. This two-layer, artificial neural network predicts the strength of upcoming monsoon precipitation using 100 years of temperature and precipitation data as input. This research is instrumental in advancing NASA’s capabilities for learning new things from large datasets by taking advantage of the high-performance computing available at the NASA Center for Climate Simulation. Graph and map by Jian Li and Thomas Maxwell, NASA/Goddard.

Machine Learning Project Examples

Physics-based models are the standard for weather and climate prediction because they are built to incorporate Earth system information and model the natural world as closely as possible. As the observational record lengthens, it becomes possible to use ML to predict conditions based on past real-world events. For example, Figure 1, above, shows a prediction of monsoon strength made with climate data and artificial neural networks (ANNs), which are first-order mathematical approximations of the human nervous system. This ML model identifies teleconnections (causal connections between meteorological or other environmental phenomena that occur a long distance apart) between two climate variables, surface temperature and atmospheric pressure, and the strength of the ensuing monsoon season.
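To make the idea of a two-layer ANN concrete, here is a minimal sketch in Python with NumPy. It is not the DSG's model or code: the data are synthetic stand-ins for standardized temperature and pressure anomalies, and the function names, hidden-layer size, learning rate, and number of training passes are all assumptions chosen for illustration.

```python
import numpy as np


def train_two_layer_ann(X, y, hidden=8, lr=0.05, epochs=3000, seed=0):
    """Fit a network with one tanh hidden layer and a linear output layer.

    Training is plain full-batch gradient descent on half the mean squared error.
    """
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, size=(X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, size=(hidden, 1))
    b2 = np.zeros(1)
    target = y.reshape(-1, 1)
    n = X.shape[0]
    for _ in range(epochs):
        # Forward pass: hidden activations, then the linear output.
        h = np.tanh(X @ W1 + b1)
        err = (h @ W2 + b2) - target
        # Backward pass: gradients for the output layer, then the hidden layer.
        grad_W2 = h.T @ err / n
        grad_b2 = err.mean(axis=0)
        dh = (err @ W2.T) * (1.0 - h ** 2)  # derivative of tanh is 1 - tanh^2
        grad_W1 = X.T @ dh / n
        grad_b1 = dh.mean(axis=0)
        W2 -= lr * grad_W2
        b2 -= lr * grad_b2
        W1 -= lr * grad_W1
        b1 -= lr * grad_b1
    return W1, b1, W2, b2


def predict(params, X):
    """Run the trained network on new inputs and return a 1-D array."""
    W1, b1, W2, b2 = params
    return (np.tanh(X @ W1 + b1) @ W2 + b2).ravel()


# Synthetic example (assumption): two standardized predictors and a made-up
# "monsoon strength" index that depends on both, plus a little noise.
data_rng = np.random.default_rng(42)
X = data_rng.normal(size=(200, 2))
y = 0.8 * X[:, 0] - 0.5 * X[:, 1] + 0.1 * data_rng.normal(size=200)

params = train_two_layer_ann(X, y)
mse = float(np.mean((predict(params, X) - y) ** 2))
baseline = float(y.var())  # error of always predicting the mean
print(f"training MSE: {mse:.4f}  (baseline variance: {baseline:.4f})")
```

A real application would train on observed climate records and evaluate on years held out from training. The comparison against the baseline variance here only confirms that the network has learned something from the inputs.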

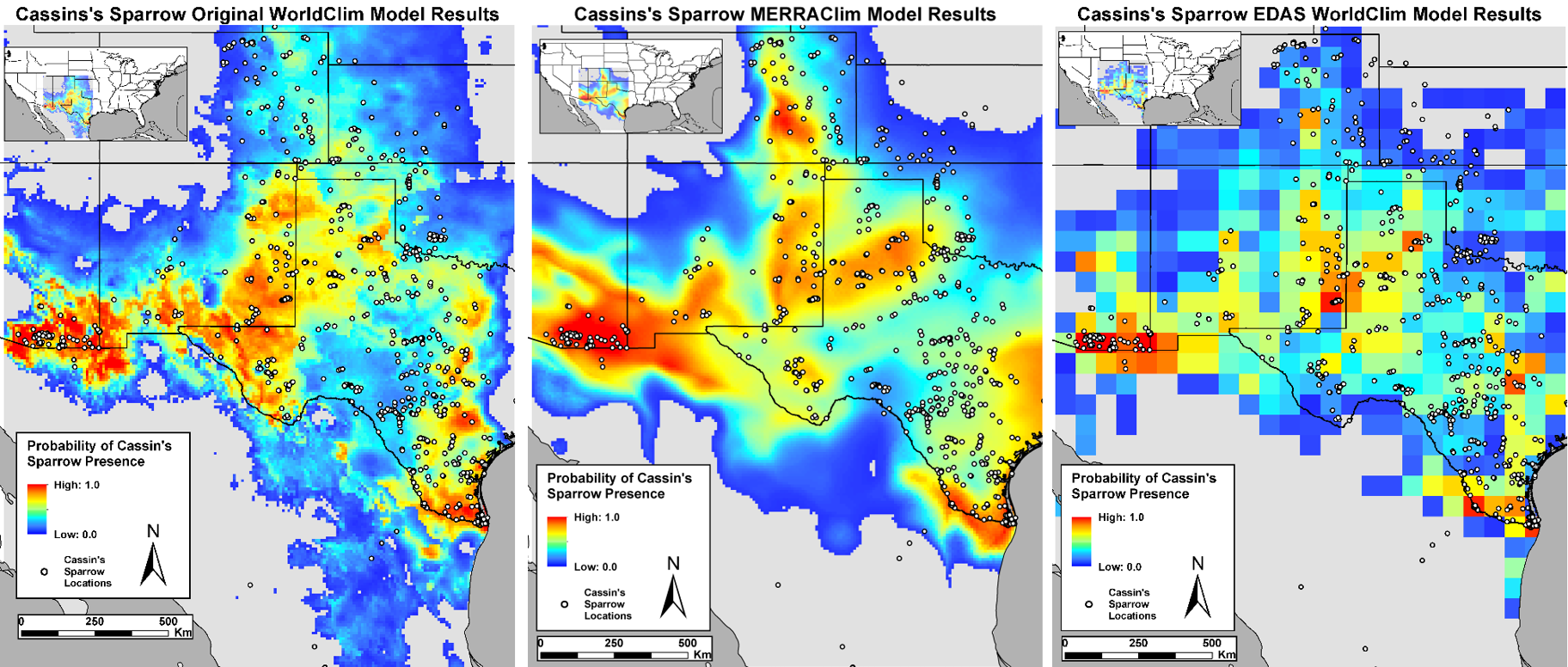

Figure 2, below, shows early results of habitat suitability mapping, which demonstrate that MERRA inputs can yield results comparable to those from the standard WorldClim data inputs. MERRA is a climate reanalysis dataset that provides hundreds of climate variables produced by NASA’s GEOS-5 climate model. WorldClim provides 19 climate variables (temperature, precipitation, etc.) that can be used as discriminatory variables in diverse studies. The next step is to include the full suite of MERRA variables in the model, using a Monte Carlo simulation to identify key variables that are absent from the WorldClim data and may improve the habitat suitability map.

Figure 2 - Species Distribution Models (SDM) use climate information (temperature, precipitation, etc.) to identify habitat that is suitable for a species of interest. The goal of this research is to use MaxEnt software with three different input datasets (WorldClim, WorldClim augmented with MERRA, and MERRA) to create habitat suitability maps for Cassin’s Sparrow in North America. The results indicate that MERRA can be used to produce comparable results to WorldClim (the standard dataset used for SDM). Data produced by Thomas Maxwell, Jian Li, and Roger Gil, NASA/Goddard. GIS maps by Mary Aronne, NASA/Goddard.
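One way to picture the planned Monte Carlo variable search is sketched below in Python. This is an illustration under stated assumptions, not the DSG's procedure: an ordinary least-squares fit stands in for MaxEnt, the ten variables and the suitability score are synthetic, and the function name and the names `var_0` through `var_9` are hypothetical. The sketch repeatedly draws random subsets of variables, fits a model on half the samples, scores it on the other half, and ranks each variable by the average score of the subsets that contained it.

```python
import numpy as np


def monte_carlo_variable_ranking(X, y, names, subset_size=3, n_trials=500, seed=0):
    """Rank variables by the mean held-out R^2 of random subsets containing them."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(y))
    train, test = order[: len(y) // 2], order[len(y) // 2:]
    totals = np.zeros(len(names))
    counts = np.zeros(len(names))
    for _ in range(n_trials):
        # Draw a random subset of candidate variables without repeats.
        cols = rng.choice(len(names), size=subset_size, replace=False)
        A_train = np.column_stack([np.ones(len(train)), X[train][:, cols]])
        coef, *_ = np.linalg.lstsq(A_train, y[train], rcond=None)
        # Score the fitted model on samples it has not seen.
        A_test = np.column_stack([np.ones(len(test)), X[test][:, cols]])
        resid = y[test] - A_test @ coef
        r2 = 1.0 - np.mean(resid ** 2) / np.var(y[test])
        totals[cols] += r2
        counts[cols] += 1
    mean_score = totals / np.maximum(counts, 1)
    ranked = np.argsort(mean_score)[::-1]
    return [(names[i], float(mean_score[i])) for i in ranked]


# Synthetic example (assumption): ten candidate variables, of which only
# var_2 and var_7 actually drive the made-up suitability score.
data_rng = np.random.default_rng(1)
names = [f"var_{i}" for i in range(10)]
X = data_rng.normal(size=(400, 10))
y = 2.0 * X[:, 2] - 1.5 * X[:, 7] + 0.5 * data_rng.normal(size=400)

ranking = monte_carlo_variable_ranking(X, y, names)
for name, score in ranking[:3]:
    print(f"{name}: mean held-out R^2 = {score:.2f}")
```

With hundreds of candidate variables, random sampling of this kind avoids fitting every possible combination, at the cost of needing enough trials for each variable to appear in many subsets.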

Above: Mark Carroll presenting “AI for Mapping Forest Patches in Sub 5-Meter Resolution Satellite Imagery” at the 2019 American Geophysical Union (AGU) Fall Meeting in San Francisco. Photo by Jarrett Cohen, NASA/Goddard.

Why HPC Matters

Code development occurs in ADAPT and the HPC environment at NCCS, but the design process includes consideration for running code in the commercial cloud. To maximize the portability of the code to different processing environments and ensure the code is parallelized to optimize performance, all code is developed with object-oriented computer science design principles.

“For the first time,” Dr. Carroll observed, “petabytes of data are co-located with large amounts of compute. This enables a new paradigm in scientific discovery, where larger, more comprehensive questions can be posed and answered. MERRA/Max is an example of what can be done by bringing together compute and data storage.

“Taking this a step further, we are exploring the packaging of the code in containers — an IT construct that allows the packaging of the code with the necessary environment to run that code — making it easier to use on disparate systems,” he added. “Containers will maximize code portability, both within the NCCS HPC systems and in external systems including commercial cloud. This is particularly important now because NASA is migrating archive holdings into the commercial cloud. By developing processes that can operate with minimal setup and are agnostic to the specific compute environment, this is potentially game-changing, in terms of accelerating science.”

Related Links

- Accelerating Water Map-Making for the Arctic Boreal Vulnerability (ABoVE) Field Campaign

- Accelerated Data Processing Saves Time, Allows for More Thorough Research

- Advanced Data Analytics Platform (ADAPT)

- MERRA Analytic Service

- Earth Data Analytics Service

- CREATE-V

- Modern-Era Retrospective analysis for Research and Applications, Version 2 (MERRA-2)

Sean Keefe, NASA Goddard Space Flight Center