Cloud in a Container: Deploying OpenStack

Hoot Thompson & Jonathan Mills

Overview

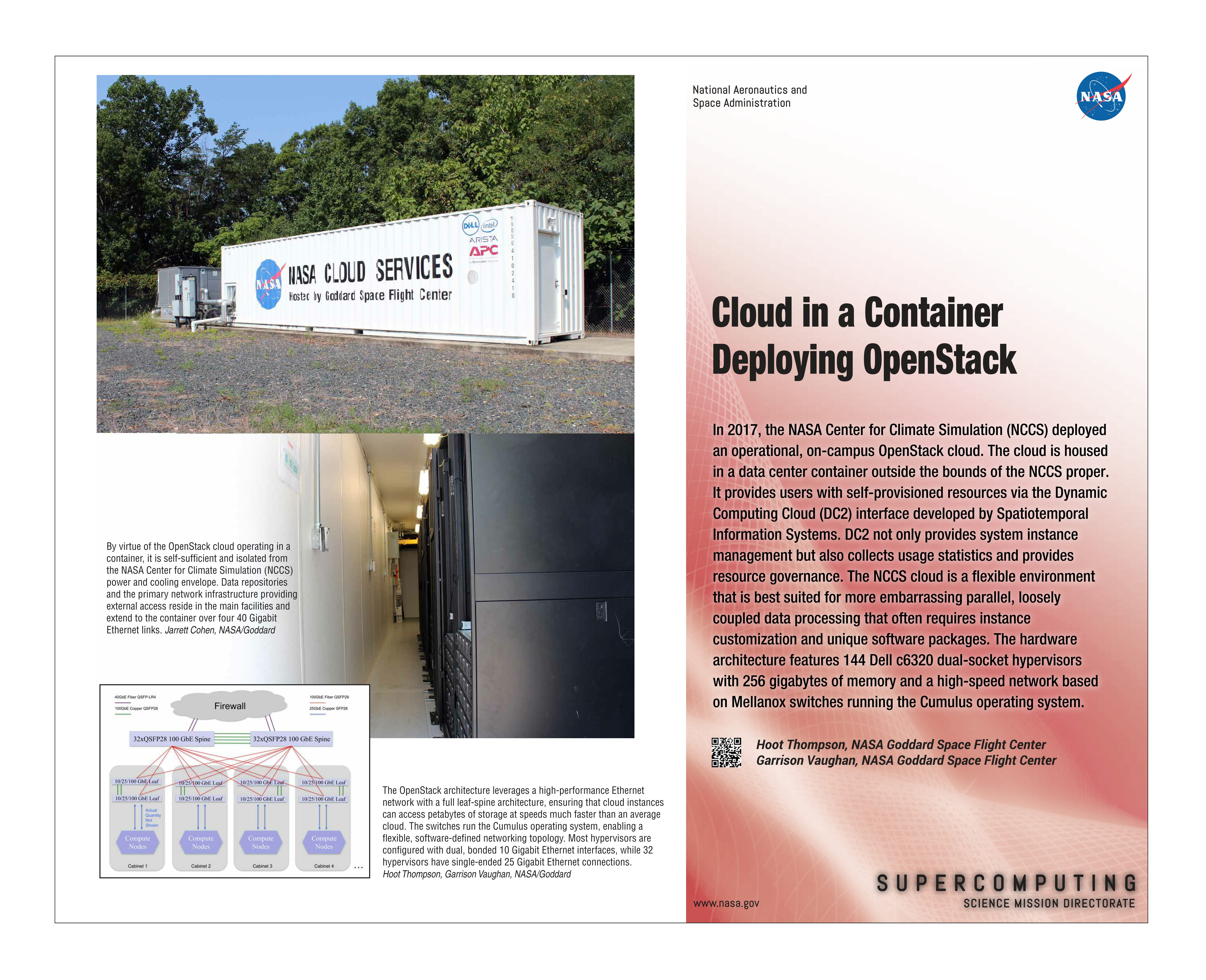

In 2017, the NASA Center for Climate Simulation (NCCS) deployed an operational, on-campus OpenStack cloud. The cloud is housed in a data center container located outside the main NCCS facility. It provides users with self-provisioned resources via the Dynamic Computing Cloud (DC2) interface developed by Spatiotemporal Information Systems. DC2 manages system instances, collects usage statistics, and enforces resource governance. The NCCS cloud is a flexible environment best suited for embarrassingly parallel, loosely coupled data processing that often requires instance customization and unique software packages. The hardware architecture features 144 Dell C6320 dual-socket hypervisors, each with 256 gigabytes of memory, and a high-speed network based on Mellanox switches running the Cumulus operating system.
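As a rough illustration of the scale these figures imply, the following Python sketch totals the sockets and memory across the cluster. It assumes the 256 gigabytes applies to each hypervisor; the 16 GB instance flavor is a hypothetical value chosen only for the example, and the estimate ignores hypervisor overhead and any oversubscription policy.

```python
# Figures from the overview; memory is assumed to be per hypervisor.
HYPERVISORS = 144
SOCKETS_PER_HYPERVISOR = 2
MEMORY_GB_PER_HYPERVISOR = 256

# Hypothetical instance size, used only for illustration.
EXAMPLE_FLAVOR_MEMORY_GB = 16


def aggregate_capacity(nodes, sockets_per_node, memory_gb_per_node):
    """Return total socket count and total memory (GB) for the cluster."""
    return {
        "sockets": nodes * sockets_per_node,
        "memory_gb": nodes * memory_gb_per_node,
    }


def instances_by_memory(total_memory_gb, flavor_memory_gb):
    """Upper bound on instances if memory were the only constraint."""
    return total_memory_gb // flavor_memory_gb


capacity = aggregate_capacity(
    HYPERVISORS, SOCKETS_PER_HYPERVISOR, MEMORY_GB_PER_HYPERVISOR
)
print(capacity)
print(instances_by_memory(capacity["memory_gb"], EXAMPLE_FLAVOR_MEMORY_GB))
```

Under these assumptions the cluster offers 288 sockets and 36,864 GB of memory in total; real instance counts would be lower once memory reserved for the hypervisors themselves is taken into account.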

Project Details

The NCCS conducted a competitive procurement, which led to the selection of Dell hypervisors and Mellanox switch components. From there, the NCCS team leveraged the knowledge gained from building a Mitaka-based prototype to instantiate the container-based cluster. The cluster uses xCAT, a proven, reliable, and flexible tool for provisioning bare-metal systems that is familiar to many high-performance computing (HPC) system administrators. In addition to xCAT, the NCCS has used a combination of upstream and in-house Puppet modules to create a system for deploying and managing OpenStack. The system has proven to be highly reproducible and fine-grained in its configuration capability. Because the OpenStack cloud operates in a container, it is self-sufficient and isolated from the NCCS power and cooling envelope. Data repositories and the primary network infrastructure providing external access reside in the main facilities and extend to the container over four 40 Gigabit Ethernet links. The DC2 interface is the user gateway for spinning up and managing instances and for obtaining usage statistics and potential billing information.
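The four 40 Gigabit Ethernet links set an upper bound on how quickly data can move between the main facilities and the container. The sketch below computes that theoretical aggregate and a best-case transfer time. It assumes all four links can be used in parallel and ignores protocol overhead and storage limits; the 100 TB dataset size is a hypothetical value chosen for illustration.

```python
# Link figures from the text above.
LINKS = 4
GBPS_PER_LINK = 40

# Hypothetical dataset size, used only for illustration.
EXAMPLE_DATASET_TB = 100


def aggregate_gbps(links, gbps_per_link):
    """Theoretical aggregate bandwidth in gigabits per second."""
    return links * gbps_per_link


def transfer_seconds(dataset_terabytes, gbps):
    """Best-case transfer time, using decimal units (1 TB = 1e12 bytes)."""
    bits = dataset_terabytes * 1e12 * 8
    return bits / (gbps * 1e9)


total_gbps = aggregate_gbps(LINKS, GBPS_PER_LINK)
seconds = transfer_seconds(EXAMPLE_DATASET_TB, total_gbps)
print(f"{total_gbps} Gb/s aggregate, {seconds / 3600:.2f} hours for "
      f"{EXAMPLE_DATASET_TB} TB")
```

At 160 Gb/s, the hypothetical 100 TB dataset would take about 5,000 seconds (roughly 1.4 hours) in the best case; sustained rates in practice would be lower.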

Results and Impact

The NCCS has implemented the following technologies with a high degree of confidence: highly available (HA) Neutron Distributed Virtual Routers (DVR); SSL encryption of all network traffic within the control plane; HA controller nodes that are active/active behind HAProxy; failover of HAProxy services with Pacemaker, Corosync, and STONITH; secure in-tenant access to very large datasets, with the General Parallel File System (GPFS) bridged to virtual machines (VMs) using NFSv4; and the DC2 user interface, which hides unnecessary features from the user and is generally easier to use than Horizon.
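To illustrate what active/active means for the controller nodes, the following Python sketch models a pool in which every healthy node receives requests in turn and a failed node is skipped. It is a conceptual model only, not HAProxy's implementation or the NCCS configuration; the class name and the controller names are hypothetical.

```python
class ActiveActivePool:
    """Round-robin selection over backends, skipping any marked down."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._index = 0

    def mark_down(self, backend):
        """Record a failed health check for a backend."""
        self._healthy.discard(backend)

    def mark_up(self, backend):
        """Return a known backend to service."""
        if backend in self._backends:
            self._healthy.add(backend)

    def next_backend(self):
        """Return the next healthy backend in rotation."""
        for _ in range(len(self._backends)):
            candidate = self._backends[self._index]
            self._index = (self._index + 1) % len(self._backends)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


# Hypothetical controller names.
pool = ActiveActivePool(["controller-1", "controller-2", "controller-3"])
print([pool.next_backend() for _ in range(3)])  # all three nodes serve traffic
pool.mark_down("controller-2")
print([pool.next_backend() for _ in range(3)])  # controller-2 is skipped
```

In an active/active arrangement all nodes carry load at once, so losing one reduces capacity rather than triggering a cold standby; the load balancer itself then becomes the component that needs failover, which is the role the text assigns to Pacemaker, Corosync, and STONITH.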

Why HPC Matters

Cloud technologies are entering traditional HPC centers as a means of addressing the growth of new communities within computational science. Scientists are far from homogeneous in their abilities to leverage large, distributed-memory jobs. Therefore, the NCCS views OpenStack as a complementary resource to Discover, its 3.5-petaflops supercomputer. OpenStack allows the NCCS to create and tailor environments to the needs of specific communities in a secure and minimally disruptive way.

What’s Next

The current objective is to expand the menu of cloud-based services (e.g., web, database, and storage capabilities) available to the user community. The OpenStack deployment is a follow-on to the managed virtual machine environment called ADAPT (Advanced Data Analytics Platform), and the intent is to assimilate the original ADAPT resources into the OpenStack management infrastructure.