// NCCS On-Premise Containers

- CONTAINERS IN A NUTSHELL

- CONTAINERS AT THE NCCS

- USING IMAGES FROM TRUSTED CONTAINER REGISTRIES

- TOOLS

- BUILDING CUSTOM IMAGES AT THE NCCS

- Sylabs.io Remote Cloud – Singularity (Preferred)

- NCCS GitLab CI – Docker and Singularity (Ideal for Sharing Containers)

- Locally – Any Container

- BEST PRACTICES

- REFERENCES

CONTAINERS IN A NUTSHELL

Containers are lightweight, portable packages that bundle an application with the operating system environment it needs, and they are meant to speed up the process of shipping new versions of software. As part of CI/CD, an application can be compiled and run from a container in any stage of the Software Development Life Cycle for testing or production. As part of DevOps, containers allow greater flexibility for the deployment of applications on immutable runtime systems, providing full reproducibility. As part of data science, containers provide a means of sharing code and artifacts for research reproducibility. The main idea of containers is the ability to run on a virtualized layer that can be executed on many hardware resources without significant modifications, giving users full control of their environment. This means that you do not have to ask your cluster admin to install anything for you: you can put it in a container and run it.

CONTAINERS AT THE NCCS

The NCCS now provides the ability to run containers on the Discover, ADAPT, and Prism high-performance computing (HPC) systems. At this time, Singularity and Charliecloud are the only container runtimes available at the NCCS, with ongoing research on supporting other container runtimes. There are three main ways of obtaining a container image to run:

- Pulling base images from a Trusted Container Registry,

- Building your own custom images, and/or

- Transferring the Container Binary to an NCCS system.

The NCCS currently provides ways, via NCCS GitLab, of building both Docker and Singularity containers through CI/CD (Continuous Integration/Continuous Delivery) processes in order to test the reliability of the images before deployment and to make them available for your team or other users to download. Any Docker container can be converted to Singularity format when it is pulled to the local systems at the NCCS. Thus, there is no need to build new images if the user's current workflow leverages Docker or another Open Container Initiative (OCI) format.

Using Images from Trusted Container Registries

One of the great advantages of containers is the sheer number of publicly available images for all kinds of software. The availability of these images allows users to get started quickly, but relying on them can negatively affect both the creation and runtime of the container. Examples include not being able to control what software the public image includes or removes, multiple dependency layers, not being able to control vulnerabilities in production environments, and end of support for the public images. The NCCS has adopted a hybrid system where base images are taken from the public registry and are modified through continuous integration to comply with Agency standards. These images are available in the NCCS's internal container registry, together with a set of trusted Container Registries outlined below.

NCCS Container Registry: catalog of Agency compliant application images for building custom containers and user-interactive workstations.

NVIDIA NGC Container Registry: catalog of AI/ML and HPC related containers provided by NVIDIA.

All NCCS-approved images are based on the following operating systems:

- CentOS7

- CentOS8

- Ubuntu18 Bionic

- Ubuntu20 Focal

- Debian9

- Debian10

- SLES12 SP5

- Alpine 3

All containers have the necessary NASA baselines applied to them and also have the NASA certs loaded into their certificate store. This makes it easier for containers to interact with GitLab or other NASA tools used within the container. In order to allow for more streamlined updates, all images use repositories available to the operating system. This approach means less compiling from source and quicker image builds. In the NCCS Containers repository, there are a vast number of images to use, from the base flavors to GoLang to PostgreSQL. These ensure that the user has the necessary NASA-specific security controls applied and provide a better level of security to the container, while maintaining the stability of using images that have gone through extensive regression testing for operational validation.

Base images are available through the format listed below. These images can be pulled directly into your environment, or be used as base images to start building any application inside a container.

gitlab.nccs.nasa.gov:5050/nccs-ci/nccs-containers/base/**operating_system_version**

gitlab.nccs.nasa.gov:5050/nccs-ci/nccs-containers/**application**/nccs-**operating_system_version**-**application_version**

Examples:

gitlab.nccs.nasa.gov:5050/nccs-ci/nccs-containers/base/centos7

gitlab.nccs.nasa.gov:5050/nccs-ci/nccs-containers/base/debian10

gitlab.nccs.nasa.gov:5050/nccs-ci/nccs-containers/openjdk/nccs-debian9-openjdk8
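The path layout above can be captured in a couple of shell helpers. This is a minimal sketch: the function names `nccs_base_image` and `nccs_app_image` and the variable `NCCS_REGISTRY` are hypothetical and introduced only for illustration. Only the registry path layout itself comes from the examples above.

```shell
#!/bin/sh
# Hypothetical helpers: the names below are illustrative, not NCCS tooling.
NCCS_REGISTRY="gitlab.nccs.nasa.gov:5050/nccs-ci/nccs-containers"

# Print the reference of a base image, e.g. "centos7" or "debian10".
nccs_base_image() {
    printf '%s/base/%s\n' "$NCCS_REGISTRY" "$1"
}

# Print the reference of an application image.
# Arguments: application, operating_system_version, application_version.
nccs_app_image() {
    printf '%s/%s/nccs-%s-%s\n' "$NCCS_REGISTRY" "$1" "$2" "$3"
}

nccs_base_image centos7
nccs_app_image openjdk debian9 openjdk8
```

The two calls at the end print the first and third example references listed above.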

Have an image you would like to see in the NCCS Container Registry? Submit an issue in the NCCS Containers repository, create a custom image and submit a merge request, or submit a ticket to NCCS Support at support@nccs.nasa.gov. An operating system and applications support matrix is included in the NCCS Containers repository.

Tools

Singularity

Singularity is a container platform created to run complex applications on HPC clusters in a simple, portable, and reproducible way. A Singularity container is executed from a single-file container image, which is easy to move using existing data mobility paradigms and requires no root-owned daemon processes. To use Singularity on ADAPT, submit a ticket to support@nccs.nasa.gov with a list of VMs where Singularity is required. Singularity is already available by default on Discover, ADAPT, and the Prism GPU Cluster by loading the singularity module with:

$ module load singularity

For example, to pull the CentOS7 GCC8 image from the NCCS Container Registry:

$ singularity pull docker://gitlab.nccs.nasa.gov:5050/nccs-ci/nccs-containers/gcc/nccs-centos7-gcc8:latest

Another example would be pulling NVIDIA's HPC SDK container from the NGC Container Registry:

$ singularity pull docker://nvcr.io/nvidia/nvhpc:21.2-devel-cuda_multi-ubuntu20.04

Singularity stores cache files under /home/$user/.singularity to speed up container downloads. Because storage on /home/ is limited, it is preferred that the Singularity cache point to the user's $NOBACKUP space. To do this, set the following two variables in your environment and/or shell startup files:

export SINGULARITY_CACHEDIR="$NOBACKUP/.singularity"

export SINGULARITY_TMPDIR="$NOBACKUP/.singularity"
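The same settings can be wrapped in a small function that also creates the directory. This is a sketch under assumptions: `setup_singularity_cache` is a hypothetical helper name, and the optional argument for overriding the base directory is an addition for illustration. The two variable names and the `$NOBACKUP/.singularity` location come from the text above.

```shell
#!/bin/sh
# Point the Singularity cache and temporary directories at a scratch area.
# setup_singularity_cache is a hypothetical helper name; the base directory
# defaults to $NOBACKUP and can be overridden with the first argument.
setup_singularity_cache() {
    _cache_base="${1:-${NOBACKUP:-}}"
    if [ -z "$_cache_base" ]; then
        echo "NOBACKUP is not set; pass a directory explicitly" >&2
        return 1
    fi
    export SINGULARITY_CACHEDIR="$_cache_base/.singularity"
    export SINGULARITY_TMPDIR="$_cache_base/.singularity"
    # Create the directory up front so it exists before the first pull.
    mkdir -p "$SINGULARITY_CACHEDIR"
}
```

Calling `setup_singularity_cache` with no arguments from a shell startup file would apply the setting to every new session.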

There are several ways of interacting with Singularity containers. The most common is getting a shell inside the container:

$ singularity shell $container_name

You may also require additional local filesystem paths to be available from inside the container, for which the "-B" option is used. In the following example we make the user's $NOBACKUP space available inside the container:

$ singularity shell -B $NOBACKUP:$NOBACKUP $container_name
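When several paths are needed, the "-B" option also accepts a comma-separated list of `src:dest` pairs. The sketch below builds such a list. `bind_spec` is a hypothetical helper name, and the paths and image name are placeholders. The command is printed rather than executed so that it can be inspected first.

```shell
#!/bin/sh
# bind_spec is a hypothetical helper: it joins the given host paths into a
# comma-separated list, mounting each path at the same location inside the
# container (src:dest with src equal to dest).
bind_spec() {
    spec=""
    for path in "$@"; do
        spec="${spec:+$spec,}$path:$path"
    done
    printf '%s\n' "$spec"
}

# Print the resulting command instead of running it; the two paths and the
# image name are placeholders.
echo "singularity shell -B $(bind_spec /path/to/nobackup /path/to/data) mycontainer.sif"
```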

For more detailed tutorials, see the NCCS Containers repository.

By default, Singularity binary containers that are run at the NCCS will be converted to sandbox format on the fly. In order to streamline the conversion, you may want to convert the container to a sandbox from the beginning. To do so run the following:

$ singularity build --sandbox mycontainer mycontainer.sif

This will create a 'mycontainer' directory, from which the container can be executed as usual.

$ singularity shell mycontainer

Charliecloud

Charliecloud uses Linux user namespaces to run containers with no privileged operations or daemons and minimal configuration changes on center resources. It provides user-defined software stacks (UDSS) for HPC centers with great usability and comprehensibility. To use Charliecloud on the ADAPT and Prism clusters, submit a ticket to support@nccs.nasa.gov with a list of virtual machines (VMs) where Charliecloud is required. Charliecloud can be used on Discover by loading the charliecloud module with:

$ module load charliecloud

For more detailed tutorials, see the NCCS Containers repository.

Building Custom Images at the NCCS

Building containers from configuration files requires elevated privileges. Thus, the NCCS offers three ways of building containers that can be run locally at the NCCS.

Sylabs.io Remote Cloud – Singularity (Preferred)

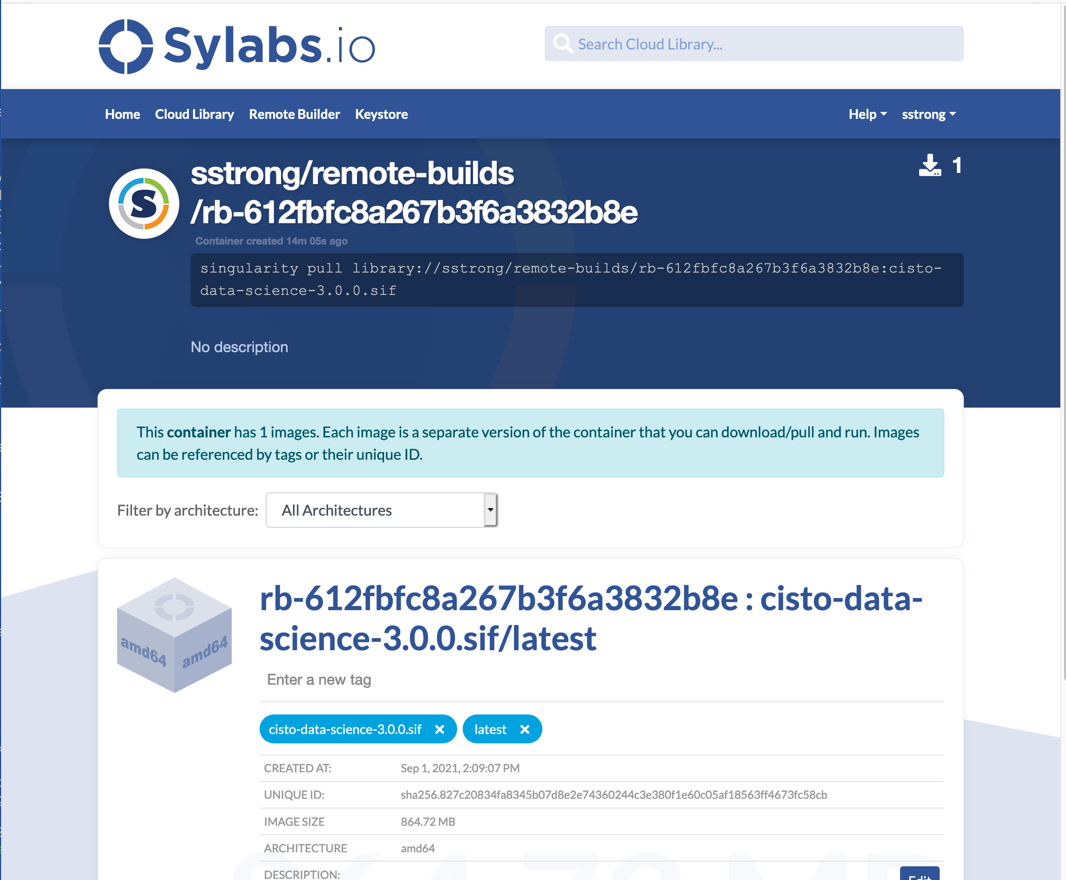

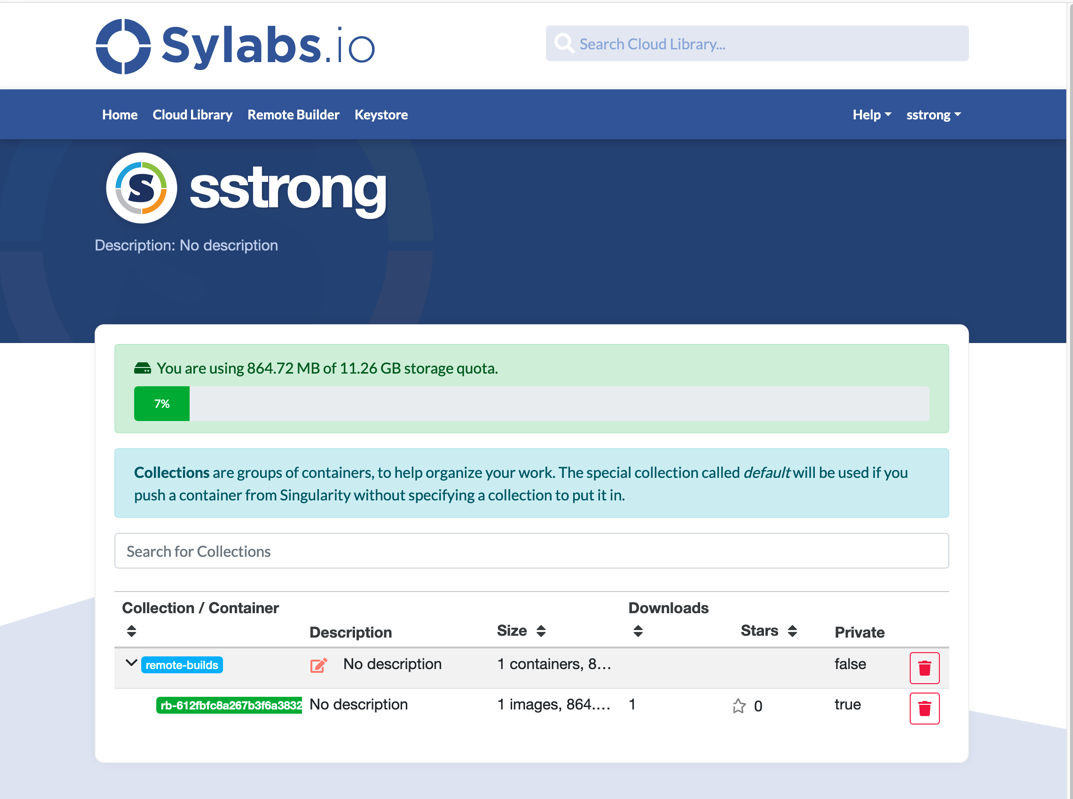

Sylabs.io provides remote building capabilities that can be leveraged from inside the NCCS. Using the Remote Builder, you can easily and securely create containers for your applications without special privileges or setup in your local environment. The Remote Builder can securely build a container for you from a definition file entered in its web interface or via the Singularity CLI.

- Go to: https://cloud.sylabs.io/ and click "Sign in" (top right).

- Click on one of your login IDs from the “Sign in”.

- Select “Access Tokens” from the drop down menu.

- Enter a name for your new access token, such as “test token” and click the “Create a New Access Token” button.

- Click “Copy token to Clipboard” from the “New API Token” page (at bottom of Account Management page).

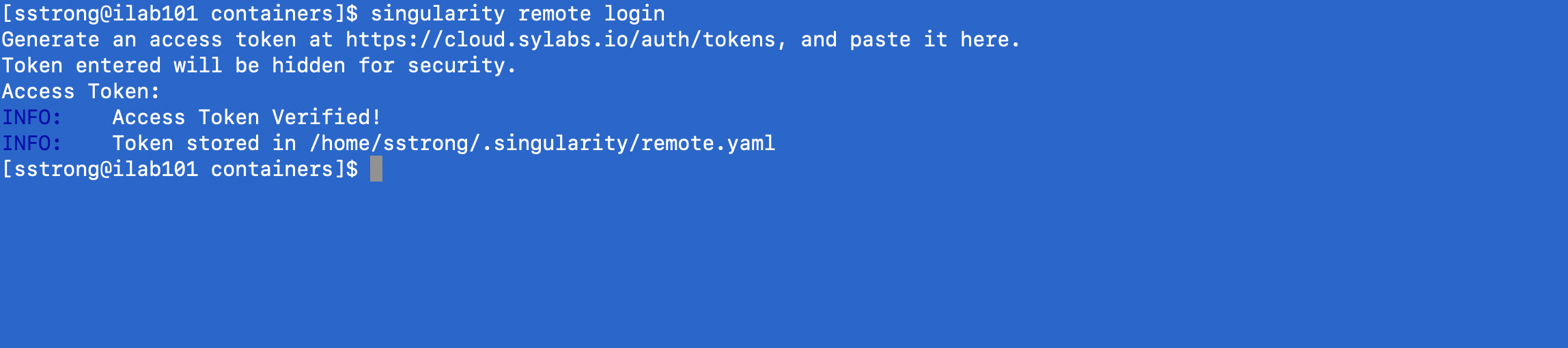

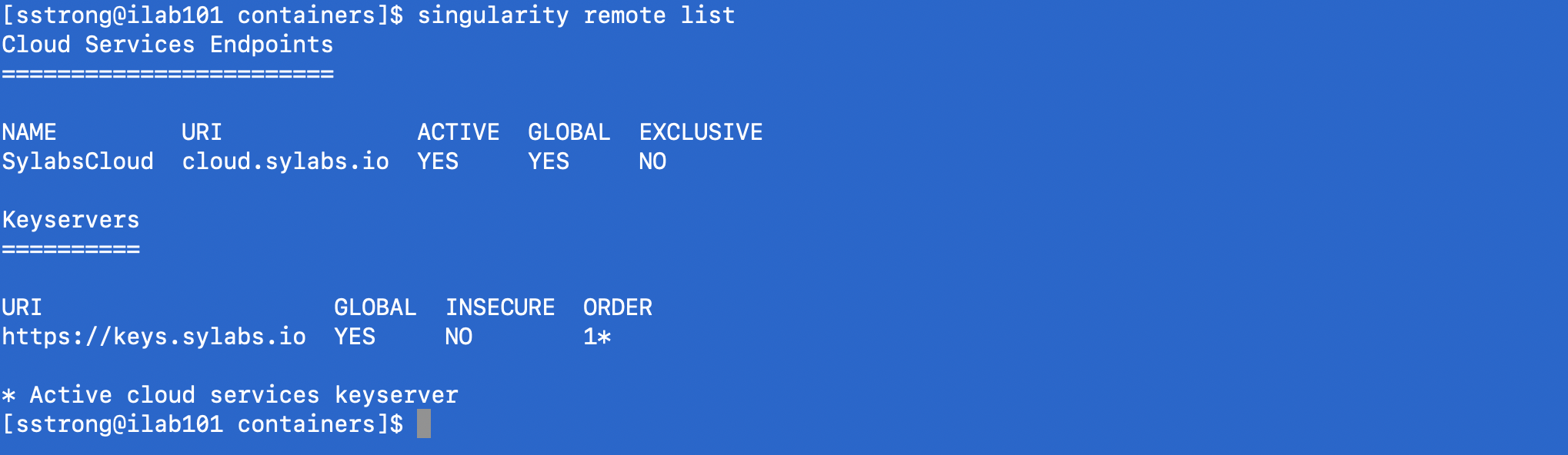

- If you have not done so, run the command 'module load singularity'. Next, from the NCCS system you are working from (e.g. gpulogin1), run 'singularity remote login' and paste the access token at the prompt.

- Once your token is stored, you can check the status of your connection to the services with the command 'singularity remote list'.

- Now that you are authenticated, you’re ready to build a container remotely. You have the option of building a sandbox straight out of the build process, or to only build a container binary.

- Container sandbox: 'singularity build --remote --sandbox your-container-name.sif your-container-name.def'

- Container binary: 'singularity build --remote your-container-name.sif your-container-name.def'

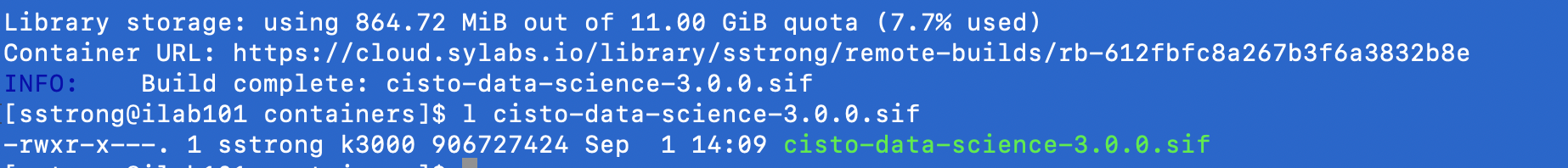

- Check on your local directory to see the just built container image. A '.sif' file should exist in the current working directory.

- The URL is a link to the remote container along with build log.

- To see all your containers, navigate to home and click on “My Containers”.
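For reference, a definition file for the build step above might look like the following. This is a minimal sketch: the public `ubuntu:20.04` base image, the installed package, and the runscript message are placeholders chosen for illustration, not NCCS recommendations. The script writes the file and then prints the remote build command from the steps above without running it.

```shell
#!/bin/sh
# Write a minimal Singularity definition file. The base image, the package,
# and the runscript message are placeholders chosen for illustration.
cat > your-container-name.def <<'EOF'
Bootstrap: docker
From: ubuntu:20.04

%post
    # Commands in this section run inside the container at build time.
    apt-get update && apt-get install -y --no-install-recommends curl

%runscript
    # Executed by "singularity run".
    echo "Container built with the remote builder"
EOF

# Show the remote build command from the steps above without executing it.
echo "singularity build --remote your-container-name.sif your-container-name.def"
```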

NCCS GitLab CI – Docker and Singularity (Ideal for Sharing Containers)

The NCCS has enabled a set of Continuous Integration systems that allow users to build containers in unprivileged environments and upload the container images to NCCS Container Registry. To enable the Custom Build and Container Registry features, submit a ticket to support@nccs.nasa.gov with the link to your NCCS GitLab Repository. If you do not have a GitLab account, visit the following GitLab Instructional for requesting access to the service.

The creation of custom images is highly encouraged. There are four build servers dedicated to building container images. Once a Container Registry is configured for your project, you must create a .gitlab-ci.yml file that points to the location of the container definition files. A template of this .gitlab-ci.yml is available in the NCCS Containers repository; you only need to remove the existing entries and add your own, with one of the following two tags to tell the GitLab Runner to use one of the build servers: "build" or "deploy". An instructional documenting this process will be available at a later date.

How to use NCCS GitLab CI:

- Create a new repository on https://gitlab.nccs.nasa.gov.

- On your repository menu, click on "Settings", and then "General".

- To enable the Container Registry for your project, click on "Visibility, project features, permissions", and toggle/enable the "Container registry" option. Click on "Save changes".

- Create or push your configuration file to the repository (Singularity.def or Dockerfile).

- From the GitLab interface of your repository, click on "+/". Then, click on "New file".

- Add the ".gitlab-ci.yml" file to your repository.

- From the Web Interface: click on "Select a template type", and select ".gitlab-ci.yml" from the dropdown menu. Then click on "Apply a template" and search for the template of your choice.

- For Docker: Docker-Build

- For Singularity: Singularity-Build

- From the Command Line: Copy the contents of the ".gitlab-ci.yml-template" file into the tree of your repository.

- Replace the email and variables elements in the .gitlab-ci.yml with your desired values, or leave them as default.

- CONTAINER_NAME: "$CI_PROJECT_NAME-container" # container name, e.g. mycontainer

- CONFIGURATION_LOCATION: "$CI_PROJECT_DIR/Dockerfile" # container configuration, e.g. packages/Dockerfile

- CONTAINER_VERSION: "latest" # container version, e.g. 1.0.0

- Click on "Commit changes" and the container should start building.

- Expect a "Green" sign when your pipeline is done; a "Red" sign means something went wrong. You can check the status of your pipeline by clicking on the sign.

- Then, from the NCCS system you are working from (e.g. gpulogin1), we will pull the container that was just built. Load the singularity module if you have not done so, `module load singularity`. Replace the values inside ${} with the actual content.

- For Docker, run:

'singularity pull docker://gitlab.nccs.nasa.gov:5050/${your_username}/${your_repository_name}/${your_container_name}:${your_container_version}'

- For Singularity, run:

'singularity pull oras://gitlab.nccs.nasa.gov:5050/${your_username}/${your_repository_name}/${your_container_name}:${your_container_version}'

- Check on your local directory to see the just built container image. A '.sif' file should exist in the current working directory.
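The two pull commands differ only in the URI scheme: `docker://` for images built from a Dockerfile and `oras://` for images built from a Singularity definition file. A small helper can make that choice explicit. This is a sketch: `nccs_pull_uri` is a hypothetical function name, and the username, repository, container name, and version in the example calls are placeholders.

```shell
#!/bin/sh
# nccs_pull_uri is a hypothetical helper that prints the URI to hand to
# "singularity pull". Arguments: kind (docker or singularity), username,
# repository name, container name, container version.
nccs_pull_uri() {
    case "$1" in
        docker)      scheme="docker" ;;   # image built from a Dockerfile
        singularity) scheme="oras" ;;     # image built from a Singularity definition file
        *) echo "unknown kind: $1" >&2; return 1 ;;
    esac
    printf '%s://gitlab.nccs.nasa.gov:5050/%s/%s/%s:%s\n' "$scheme" "$2" "$3" "$4" "$5"
}

# Placeholder values; replace them with your own.
nccs_pull_uri docker johndoe myrepo mycontainer latest
nccs_pull_uri singularity johndoe myrepo mycontainer 1.0.0
```

The printed URI can then be passed to the pull command, for example `singularity pull "$(nccs_pull_uri docker johndoe myrepo mycontainer latest)"`.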

Locally – Any Container

Users can build custom images on their local workstations and transfer the resulting files to the NCCS via any network transfer tool such as SCP or RSYNC. You will need elevated privileges on your local workstation to accomplish this.

How to build containers locally:

- Create the definition file of your preference.

- Build the container from your system, 'sudo singularity build lolcow.sif lolcow.def'

- Transfer the container to the NCCS, as an example 'scp lolcow.sif adaptlogin.nccs.nasa.gov:~/'
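Container images can be large, so it may be worth confirming that the copy arrived intact. The sketch below is an optional addition, not part of the NCCS procedure: `write_checksum` and `verify_checksum` are hypothetical helper names built on the standard `sha256sum` utility, which is assumed to be available on both systems.

```shell
#!/bin/sh
# Record a SHA-256 checksum next to the image so the copy can be verified
# after the transfer. write_checksum and verify_checksum are hypothetical
# helper names; both take the path to the image file.
write_checksum() {
    ( cd "$(dirname "$1")" && sha256sum "$(basename "$1")" > "$(basename "$1").sha256" )
}

# Succeeds only if the image matches the checksum file stored beside it.
verify_checksum() {
    ( cd "$(dirname "$1")" && sha256sum -c "$(basename "$1").sha256" )
}
```

On the workstation, `write_checksum lolcow.sif` creates `lolcow.sif.sha256`; after copying both files to the NCCS, `verify_checksum ~/lolcow.sif` checks the transferred image against it.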

Best Practices

A PDF outlining NCCS Containers best practices can be found here:

NCCS Containers Best Practices

References

See also this case study using NCCS resources: